PostgreSQL

/pg138

A place to discuss the PostgreSQL RDBMS

wow!

I didn't realize AWS has a mechanism for taking consistent snapshots across EBS volumes.

https://aws.amazon.com/blogs/storage/taking-crash-consistent-snapshots-across-multiple-amazon-ebs-volumes-on-an-amazon-ec2-instance/

I didn't realize AWS has a mechanism for taking consistent snapshots across EBS volumes.

https://aws.amazon.com/blogs/storage/taking-crash-consistent-snapshots-across-multiple-amazon-ebs-volumes-on-an-amazon-ec2-instance/

Fascinating to think about optimizing loops for CPUs. I wonder if PG's numeric additions could benefit from a more branchless approach

https://github.com/postgres/postgres/blob/4f15759bdcddd23e874526a6b2c0ff86e0beb042/src/interfaces/ecpg/pgtypeslib/numeric.c#L637-L754

https://15721.courses.cs.cmu.edu/spring2023/slides/06-execution.pdf

https://github.com/postgres/postgres/blob/4f15759bdcddd23e874526a6b2c0ff86e0beb042/src/interfaces/ecpg/pgtypeslib/numeric.c#L637-L754

https://15721.courses.cs.cmu.edu/spring2023/slides/06-execution.pdf

UPDATE heavy workloads on PG are known to scatter rows around on pages which may increase IO when scanning many rows in a single query. Unless you are getting 100% HOT updates (most aren't).

To solve this in the past, I've used CLUSTER -- which isn't great because it requires a lock and takes a long time. It's almost unusable.

pg_repack solves this by doing online re-clustering and storage reclamation.

https://reorg.github.io/pg_repack/

To solve this in the past, I've used CLUSTER -- which isn't great because it requires a lock and takes a long time. It's almost unusable.

pg_repack solves this by doing online re-clustering and storage reclamation.

https://reorg.github.io/pg_repack/

https://www.cs.cmu.edu/~pavlo/blog/2023/04/the-part-of-postgresql-we-hate-the-most.html

Spoiler: MVCC

This also helps bring awareness to the impact of UPDATE heavy workloads on PG

Spoiler: MVCC

This also helps bring awareness to the impact of UPDATE heavy workloads on PG

A rebuttal to Uber’s PG/MySQL paper.

Keep in mind, PG has changed since this discussion. A lot of the replication issues are long since put to rest.

The storage sub-systems are the interesting point of difference between these systems. MYSQL’s indexes point to primary keys instead of a CTID. An update to a MySQL row will have same storage location but PG will be a new CTID/page — unless it was a Heap Only Tuple (HOT) update. But this means PG can reduce IO for queries.

As with anything, it’s always a tradeoff. However, database systems in particular are especially sensitive to workload details. The tradeoffs really matter!

https://thebuild.com/presentations/uber-perconalive-2017.pdf

Keep in mind, PG has changed since this discussion. A lot of the replication issues are long since put to rest.

The storage sub-systems are the interesting point of difference between these systems. MYSQL’s indexes point to primary keys instead of a CTID. An update to a MySQL row will have same storage location but PG will be a new CTID/page — unless it was a Heap Only Tuple (HOT) update. But this means PG can reduce IO for queries.

As with anything, it’s always a tradeoff. However, database systems in particular are especially sensitive to workload details. The tradeoffs really matter!

https://thebuild.com/presentations/uber-perconalive-2017.pdf

Using stored procedures for complex query patterns makes sense, but I don't have an intuition for the kind of query complexity necessary before seeing tangible benefits switching from prepared statements.

Glad someone is experimenting! https://github.com/pg-nano/pg-nano/

Glad someone is experimenting! https://github.com/pg-nano/pg-nano/

initial thoughts on AWS DSQL

1. PG compatible (pg is dominant at this point)

2. Most of the article talked about HA

3. AWS has a proprietary, internal distributed transaction log service that is supposedly the most important piece of AWS and everything in AWS depends on it. Hope that teams makes 10M annual tc each.

4. It depresses me to think that the future of compute and storage is: run it on aws

It's really neat to think about having a network storage layer that can support many database frontends. this seems like the future. Neon et al. are moving in this direction. Also something that Stonebraker has been talking about for a while now.

https://aws.amazon.com/blogs/database/introducing-amazon-aurora-dsql/

1. PG compatible (pg is dominant at this point)

2. Most of the article talked about HA

3. AWS has a proprietary, internal distributed transaction log service that is supposedly the most important piece of AWS and everything in AWS depends on it. Hope that teams makes 10M annual tc each.

4. It depresses me to think that the future of compute and storage is: run it on aws

It's really neat to think about having a network storage layer that can support many database frontends. this seems like the future. Neon et al. are moving in this direction. Also something that Stonebraker has been talking about for a while now.

https://aws.amazon.com/blogs/database/introducing-amazon-aurora-dsql/

https://avi.im/blag/2024/zero-disk-architecture/

This is a really neat idea. Low latency, CAS, and append are killer S3 features.

But kind of dystopian to think that all our data is owned and operated by a single US company.

WDYT?

This is a really neat idea. Low latency, CAS, and append are killer S3 features.

But kind of dystopian to think that all our data is owned and operated by a single US company.

WDYT?

It's quite rich that the "Tablespaces" section follows the "Destroying a Database" section since misconfiguring table spaces is an excellent way to absolutely hose your database.

pg_column_size is nice. I knew a bigint was 8 bytes, but I was curious to see the storage size for comparable numeric data.

What every programmer should know about solid-state drives

https://codecapsule.com/2014/02/12/coding-for-ssds-part-6-a-summary-what-every-programmer-should-know-about-solid-state-drives/

https://codecapsule.com/2014/02/12/coding-for-ssds-part-6-a-summary-what-every-programmer-should-know-about-solid-state-drives/

I'm behind the times—apparently folks have been running Postgres on unikernels for quite some time†.

https://www.prisma.io/blog/announcing-prisma-postgres-early-access

https://nanovms.com/dev/tutorials/running-postgres-as-a-unikernel †

https://www.prisma.io/blog/announcing-prisma-postgres-early-access

https://nanovms.com/dev/tutorials/running-postgres-as-a-unikernel †

Prisma Postgres®: Building a Modern PostgreSQL Service Using Unikernels & MicroVMs

At Prisma, we believe that deploying a database should be as simple as adding a new page in Notion. Today, we are excited to share the first milestone towards this vision: Prisma Postgres® gives developers an always-on database with pay-as-you-go pricing, thanks to our unique architecture design.

www.prisma.io

Running Postgres as a Unikernel

This isn't the first time we took a look at running postgres as a unikernel. A few years ago one of our engineers took a look at how difficult it might be to port it.

nanovms.com

Damn.

Screen shot from this talk on PG/ZFS

https://people.freebsd.org/%7Eseanc/postgresql/scale15x-2017-postgresql_zfs_best_practices.pdf

Screen shot from this talk on PG/ZFS

https://people.freebsd.org/%7Eseanc/postgresql/scale15x-2017-postgresql_zfs_best_practices.pdf

Config for running PG on ZFS

https://vadosware.io/post/everything-ive-seen-on-optimizing-postgres-on-zfs-on-linux/

https://vadosware.io/post/everything-ive-seen-on-optimizing-postgres-on-zfs-on-linux/

Fun PG-related meetup for the SF folks

1229

Harry

@htormey·17:05 30/10/2024

Hosting a panel of expert speakers from engineering at Roblox, YugabyteDB and Coinbase tonight in SF. The subject is SQL at scale, if you are interested in joining signup below. Should be an interesting discussion. https://lu.ma/rcr09cp4

I love these 5 minutes of Postgres videos. Here's a good one on upcoming IO changes:

https://www.youtube.com/watch?v=QAYzWAlxCYc

https://www.youtube.com/watch?v=QAYzWAlxCYc

Did you know that Postgres was originally written in LISP? (and C)

"We expected that it would be especially easy to write the optimizer and inference engine in LISP, since both are mostly tree processing modules"

https://dsf.berkeley.edu/papers/ERL-M90-34.pdf

"We expected that it would be especially easy to write the optimizer and inference engine in LISP, since both are mostly tree processing modules"

https://dsf.berkeley.edu/papers/ERL-M90-34.pdf

PostgreSQL's C driver is called: libpq

What's PQ?

Q was short for QUEL. QUEL is a dead query language once used by Ingress. PostgreSQL was post-Ingress.

At one time PG supported a query language called PostQUEL.

What's PQ?

Q was short for QUEL. QUEL is a dead query language once used by Ingress. PostgreSQL was post-Ingress.

At one time PG supported a query language called PostQUEL.

https://www.depesz.com/2022/07/05/understanding-pg_stat_activity/

1) Depsez is pg legend

2) This is a great explainer on pg_stat_activity

1) Depsez is pg legend

2) This is a great explainer on pg_stat_activity

just added a new chain to @indexsupply and used PG 17!

eager to see how the new vector IO impacts table scans

eager to see how the new vector IO impacts table scans

Very slick: https://postgres.new

Now make a system that takes your schema and inspects pg_stat_statements on your production system and makes actionable recommendations of migrations to improve performance.

Now make a system that takes your schema and inspects pg_stat_statements on your production system and makes actionable recommendations of migrations to improve performance.

Fantastic talk on PG's share buffers -- and memory in general

https://www.youtube.com/watch?v=u-r8VuzXeBE

https://www.youtube.com/watch?v=u-r8VuzXeBE

One of those ideas that sounds good at first but upon deeper reflection reveals otherwise (project abandoned). Might still be useful for querying information about your infrastructure, but not for creating.

https://iasql.com

https://iasql.com

Supabase CEO on user complaints the self hosted version is limited and sucks compared to the cloud version

https://www.reddit.com/r/selfhosted/s/v02qg93ao9

https://www.reddit.com/r/selfhosted/s/v02qg93ao9

How does Postgres manage to do this? 🧐

SELECT S

WHERE W

ORDER BY O

> takes 0.193s

-----

SELECT S

WHERE W

ORDER BY O

LIMIT 20

> takes 74.129s

SELECT S

WHERE W

ORDER BY O

> takes 0.193s

-----

SELECT S

WHERE W

ORDER BY O

LIMIT 20

> takes 74.129s

I enjoy using PGTune when setting up a fresh pg server. Maybe you will too:

https://pgtune.leopard.in.ua

https://pgtune.leopard.in.ua

An enjoyable read on how PG encodes NUMERIC data:

https://github.com/postgres/postgres/blob/05a5a1775c89f6beb326725282e7eea1373cbec8/src/backend/utils/adt/numeric.c#L253-L303

https://github.com/postgres/postgres/blob/05a5a1775c89f6beb326725282e7eea1373cbec8/src/backend/utils/adt/numeric.c#L253-L303

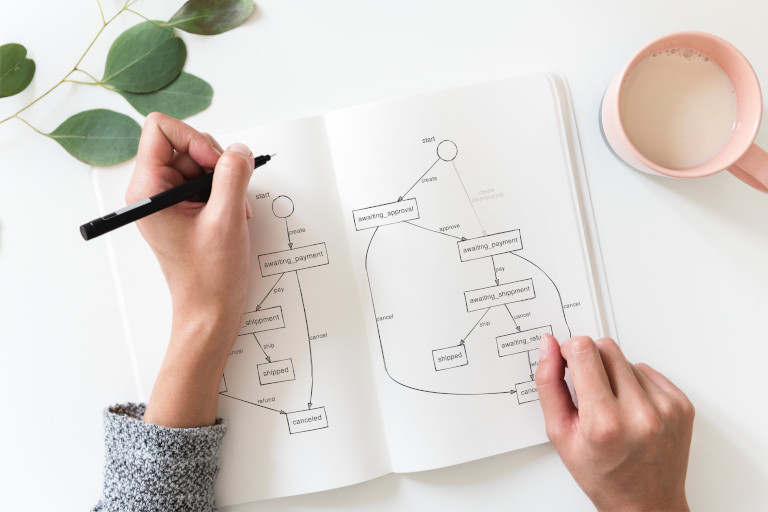

FSMs as a core data primitive are going to get more popular. Combined with a runtime library for your preferred language, and you have a way to formally verify statements about business logic in your system.

https://raphael.medaer.me/2019/06/12/pgfsm.html

https://raphael.medaer.me/2019/06/12/pgfsm.html

Anyone got a feature / fix that they are eager to see land in 17?

Here are a few I'm looking forward to:

- COPY option ON_ERROR ignore to discard error rows

- to_bin() and to_oct()

Here are a few I'm looking forward to:

- COPY option ON_ERROR ignore to discard error rows

- to_bin() and to_oct()

Impressive tool from Xata providing a solution for zero-downtime, REVERSIBLE (!!!) migrations—the holy grail.

Excited for this to eventually reach v1. Current feature set is very impressive, but it's not yet ready to handle all possible migrations just yet. Something to keep an eye on.

https://xata.io/blog/pgroll-schema-migrations-postgres

Excited for this to eventually reach v1. Current feature set is very impressive, but it's not yet ready to handle all possible migrations just yet. Something to keep an eye on.

https://xata.io/blog/pgroll-schema-migrations-postgres

Great post on how PG lock behavior during schema changes. It's surprising (but obvious in hindsight) how a statement that obtains a lock which doesn't conflict with a schema change could still be blocked by another statement that does obtain a blocking lock, due to the FIFO nature of the lock queue.

https://xata.io/blog/migrations-and-exclusive-locks

https://xata.io/blog/migrations-and-exclusive-locks

Should be some good perf. improvements with v17

https://www.postgresql.org/docs/17/release-17.html#RELEASE-17-OPTIMIZER

in particular, some CTEs should get faster

https://www.postgresql.org/docs/17/release-17.html#RELEASE-17-OPTIMIZER

in particular, some CTEs should get faster

A CTE (WITH) can be MATERIALIZED or NOT MATERIALIZED.

When MATERIALIZED, the query is computed only once for the outer query. Good for reducing work. Not good when you reference the CTE multiple times with different predicates.

NOT MATERIALIZED forces PG to "inline" the CTE which allows predicate push down but possibly duplicates work.

The default is MATERIALIZED when a CTE is referenced more than once.

https://www.postgresql.org/docs/current/queries-with.html#QUERIES-WITH-CTE-MATERIALIZATION

When MATERIALIZED, the query is computed only once for the outer query. Good for reducing work. Not good when you reference the CTE multiple times with different predicates.

NOT MATERIALIZED forces PG to "inline" the CTE which allows predicate push down but possibly duplicates work.

The default is MATERIALIZED when a CTE is referenced more than once.

https://www.postgresql.org/docs/current/queries-with.html#QUERIES-WITH-CTE-MATERIALIZATION

TIL pg source has a helpful README for the Executor

https://github.com/postgres/postgres/blob/master/src/backend/executor/README

https://github.com/postgres/postgres/blob/master/src/backend/executor/README

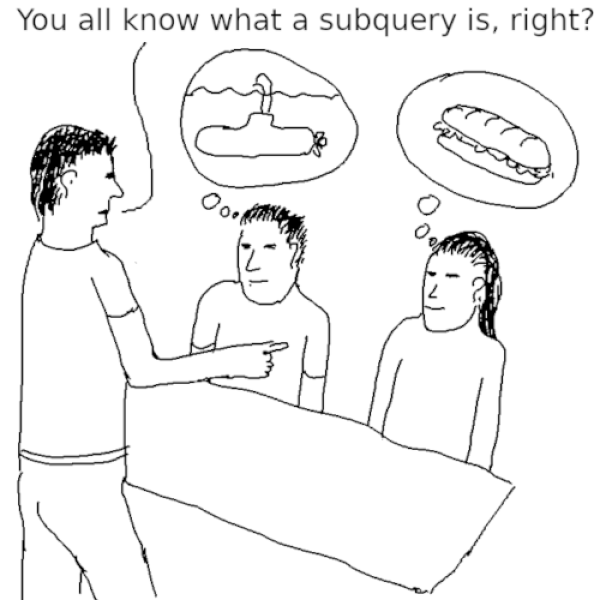

Good article on correlated / uncorrelated subqueries:

https://www.cybertec-postgresql.com/en/subqueries-and-performance-in-postgresql/

https://www.cybertec-postgresql.com/en/subqueries-and-performance-in-postgresql/

without looking it up, please guess the following limits:

1. relations per database

2. relation size

1. relations per database

2. relation size

I really enjoy using _actual_ postgres servers in my tests. Packages like this one: https://github.com/theseus-rs/postgresql-embedded make it fast and easy!

With an unlogged table, Postgres is fast as a cache. Downside is unlogged tables aren't replicated, and so this pattern doesn't scale.

https://www.cybertec-postgresql.com/en/postgresql-vs-redis-vs-memcached-performance/

https://www.cybertec-postgresql.com/en/postgresql-vs-redis-vs-memcached-performance/

What's a good rule of thumb for shared_buffers size? 25% of RAM?

Excellent overview of all the different kinds of lock in Postgres and the various SQL statements that invoke them:

https://medium.com/@hnasr/postgres-locks-a-deep-dive-9fc158a5641c

https://medium.com/@hnasr/postgres-locks-a-deep-dive-9fc158a5641c

PG incremental base backups seem really neat:

https://pganalyze.com/blog/5mins-postgres-17-incremental-backups

https://pganalyze.com/blog/5mins-postgres-17-incremental-backups

I sometimes find myself thinking: this would a great case for PG ARRAYs

But it always comes back to bite me

But it always comes back to bite me

Postgres in the browser. This space is starting to get really interesting.

https://github.com/electric-sql/pglite

https://github.com/electric-sql/pglite

most projects that "embed" pg binaries into the test suites end up using this one maven repo: https://mvnrepository.com/artifact/io.zonky.test.postgres

ZONKY

ZONKY

Postgres C hits different:

fctx->tupdesc = BlessTupleDesc(tupdesc);

https://github.com/postgres/postgres/blob/f4fdc24aa35c2268f519905a3a66658ebd55a466/src/backend/executor/execTuples.c#L2149-L2165

fctx->tupdesc = BlessTupleDesc(tupdesc);

https://github.com/postgres/postgres/blob/f4fdc24aa35c2268f519905a3a66658ebd55a466/src/backend/executor/execTuples.c#L2149-L2165

)